Web scraping is a technique that makes it possible to obtain data from a particular website. Websites use HTML to describe their content. If the HTML is clean and semantic, it's easy to use it to locate useful data.

You'll typically use a web scraper to obtain and monitor data and track future changes to it.

jQuery Concepts Worth Knowing Before You Use Cheerio

jQuery is one of the most popular JavaScript packages in existence. It makes it easier to work with the Document Object Model (DOM), handle events, animation, and more. Cheerio is a fast HTML and XML parsing library for Node.js that implements a subset of core jQuery. It does not run jQuery itself, but it shares much of jQuery's syntax and API, so most of what you know about jQuery selection and traversal carries over.

Before you learn how to use Cheerio, it helps to know how to select HTML elements with jQuery. Thankfully, jQuery supports most CSS3 selectors, which makes it easy to grab elements from the DOM. Take a look at the following code:

$("#container");

In the code block above, jQuery selects the element with the id "container". A similar implementation using plain JavaScript would look something like this:

document.querySelectorAll("#container");

Comparing the last two code blocks, you can see the former code block is much easier to read than the latter. That is the beauty of jQuery.

jQuery also has useful methods for reading and changing elements: text() gets or sets an element's text content, and html() gets or sets its inner HTML. To traverse the DOM, you can use methods like parent(), siblings(), prev(), and next().

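The each() method is used in many Cheerio projects. Called on a selection, it runs a callback once for every matched element. The syntax looks like this:

$(selector).each(function (index, element) { /* ... */ })

In the code block above, the callback receives the zero-based index and the current element, and this is also bound to that element. Returning false from the callback stops the loop early. jQuery additionally offers a separate utility, $.each(arrayOrObject, callback), for iterating over plain arrays and objects, but the selection method shown here is the one you will use most with Cheerio.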

Loading HTML With Cheerio

To begin parsing HTML or XML data with Cheerio, you can use the cheerio.load() method. Take a look at this example:

const cheerio = require('cheerio');
const $ = cheerio.load('<html><body><h1>Hello, world!</h1></body></html>');
console.log($('h1').text()); // Hello, world!

This code block uses the text() method, which works just like its jQuery counterpart, to retrieve the text content of the h1 element. The full syntax for the load() method looks like this:

load(content, options, isDocument)

The content parameter is the HTML or XML data you pass to the load() method. options is an optional object that changes how the content is parsed, for example { xml: true } for XML documents. The third argument, isDocument, defaults to true, which makes load() add html, head, and body elements if they're missing. To parse the content as a fragment and stop this behavior, pass false as the third argument.

Scraping Hacker News With Cheerio

The code used in this project is available in a GitHub repository and is free for you to use under the MIT license.

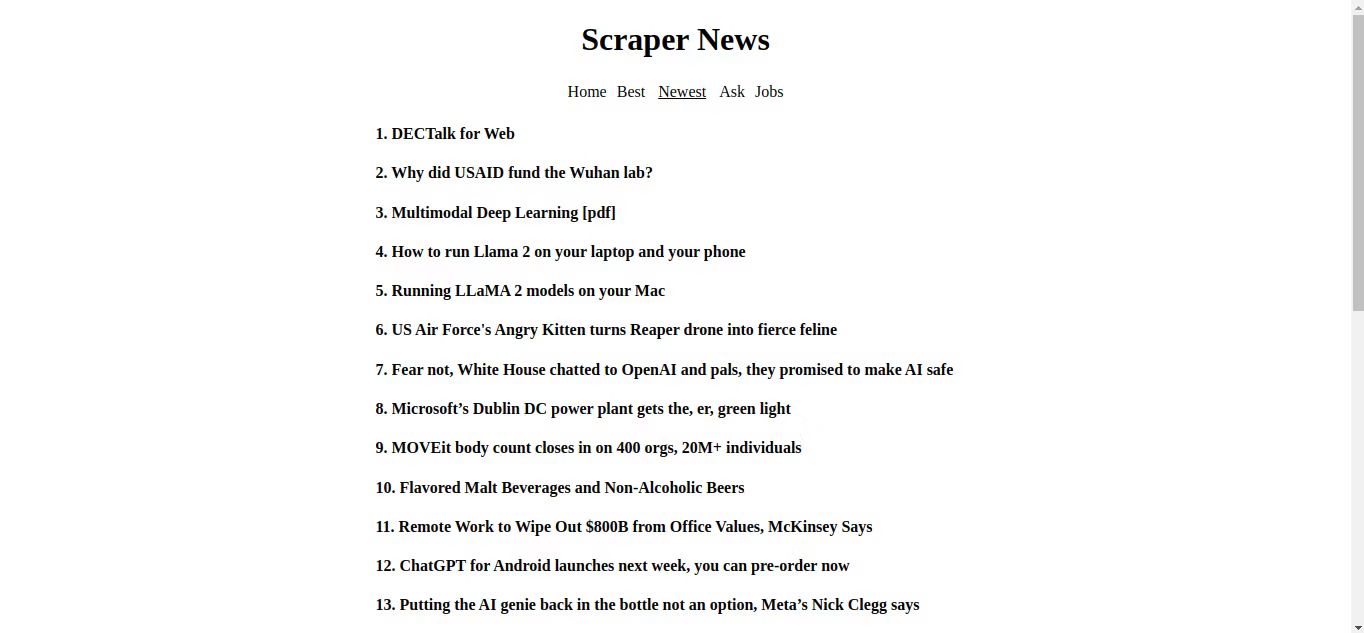

It's time to combine everything you have learned so far and create a simple web scraper. Hacker News is a popular website for entrepreneurs and innovators. It is also a perfect website to practice your web scraping skills on because it loads fast, has a very simple interface, and does not serve any ads.

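Make sure you have Node.js and npm (the Node Package Manager) installed on your machine. The scraper uses the built-in fetch() function, which is available in Node.js 18 and later. Create an empty folder, add a package.json file to it, and put the following JSON inside the file: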

{
  "name": "web-scraper",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "start": "nodemon index.js"
  },
  "author": "",
  "license": "MIT",
  "dependencies": {
    "cheerio": "^1.0.0-rc.12",
    "express": "^4.18.2"
  },
  "devDependencies": {
    "nodemon": "^3.0.1"
  }
}

After doing that, open the terminal and run:

npm i

This should install the necessary dependencies you need to build the scraper. These packages include Cheerio for parsing the HTML, ExpressJS for creating the server, and—as a development dependency—Nodemon, a utility that listens for changes in the project and automatically restarts the server.

Setting Things Up and Creating the Necessary Functions

Create an index.js file. In that file, declare a constant called PORT and set it to 5500 (or any free port you choose), then import the Cheerio and Express packages and create an Express app.

const PORT = 5500;
const cheerio = require("cheerio");
const express = require("express");
const app = express();

Next, define three variables: url, html, and finishedPage. Set url to the Hacker News URL.

const url = 'https://news.ycombinator.com';
let html;
let finishedPage;

Now create a function called getHeader() that returns some HTML that the browser should render.

function getHeader() {
  return `
    <div style="display:flex; flex-direction:column; align-items:center;">
      <h1 style="text-transform:capitalize">Scraper News</h1>
      <div style="display:flex; gap:10px; align-items:center;">
        <a href="/" id="news" onClick='showLoading()'>Home</a>
        <a href="/best" id="best" onClick='showLoading()'>Best</a>
        <a href="/newest" id="newest" onClick='showLoading()'>Newest</a>
        <a href="/ask" id="ask" onClick='showLoading()'>Ask</a>
        <a href="/jobs" id="jobs" onClick='showLoading()'>Jobs</a>
      </div>
      <p class="loading" style="display:none;">Loading...</p>
    </div>
  `;
}

Then create another function, getScript(), that returns some JavaScript for the browser to run. It takes the post type (such as "/news") as its type argument, so make sure you pass it in when you call the function.

function getScript(type) {
  return `
    <script>
      document.title = "${type.substring(1)}";

      window.addEventListener("DOMContentLoaded", (e) => {
        let navLinks = [...document.querySelectorAll("a")];
        let current = document.querySelector("#${type.substring(1)}");
        document.body.style = "margin:0 auto; max-width:600px;";
        navLinks.forEach(x => x.style = "color:black; text-decoration:none;");
        current.style.textDecoration = "underline";
        current.style.color = "black";
        current.style.padding = "3px";
        current.style.pointerEvents = "none";
      });

      function showLoading(e) {
        document.querySelector(".loading").style.display = "block";
        document.querySelector(".loading").style.textAlign = "center";
      }
    </script>`;
}

Finally, create an asynchronous function called fetchAndRenderPage(). It fetches a Hacker News page, parses and formats it with Cheerio, and then sends the resulting HTML back to the client for rendering. Start with the request:

async function fetchAndRenderPage(type, res) {
  // Request the Hacker News page for this post type, e.g. https://news.ycombinator.com/news
  const response = await fetch(`${url}${type}`);
  html = await response.text();
}

Hacker News groups posts into several types, each served from its own path. "news" is the front page, "ask" holds posts seeking answers from other Hacker News members, "best" lists trending, top-voted posts, "newest" lists the latest submissions, and "jobs" lists job vacancies.

fetchAndRenderPage() requests the Hacker News page that matches the type you pass in as an argument and stores the response body in the html variable. Note that it does not check the response status, so an error page would be stored and parsed like any other.
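As written, fetchAndRenderPage() stores whatever body comes back, and a network failure rejects a promise that the route handlers never catch. A minimal sketch of a stricter request step follows. fetchHtml() is a hypothetical helper, not part of the original project, and its fetchImpl parameter exists only so it can be exercised with a stub instead of a real network call.

```javascript
// Hypothetical helper: fetches a page and rejects unless the server answered with a 2xx status.
// fetchImpl defaults to the global fetch (Node.js 18+) and can be swapped for a stub.
async function fetchHtml(pageUrl, fetchImpl = fetch) {
  const response = await fetchImpl(pageUrl);
  if (!response.ok) {
    throw new Error(`Request to ${pageUrl} failed with status ${response.status}`);
  }
  return response.text();
}

// Example with a stubbed fetch so no network request is made
const stubFetch = async () => ({ ok: false, status: 503, text: async () => "" });
fetchHtml("https://news.ycombinator.com/news", stubFetch).catch((err) => console.error(err.message));
// Request to https://news.ycombinator.com/news failed with status 503
```

Inside fetchAndRenderPage you might then await fetchHtml(`${url}${type}`) inside a try/catch and answer with res.status(502).send("Could not reach Hacker News") when it throws. That way a failed request neither leaves the browser waiting nor surfaces as an unhandled rejection.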

Next, add the following lines to the function:

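  // Tell the browser to treat the response as HTML, then send the header first
  res.set('Content-Type', 'text/html');
  res.write(getHeader());

  // Parse the fetched page so it can be queried with jQuery-style selectors
  const $ = cheerio.load(html);
  const articles = [];
  let i = 1;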

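In the code block above, the set() method sets the Content-Type HTTP header so the browser renders the response as HTML. The write() method sends a chunk of the response body, here the page header. cheerio.load() parses the fetched html and returns a $ function you can query with jQuery-style selectors.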

Next, add the following lines to select the a elements that are direct children of every element with the class "titleline", which is where Hacker News places each story's title link.

  // Each .titleline element holds the story link as a direct <a> child
  $('.titleline').children('a').each(function () {
    let title = $(this).text();
    articles.push(`<h4>${i}. ${title}</h4>`);
    i++;
  });

In this code block, each iteration reads the text content of the current link, stores it in the title variable, and pushes a numbered h4 heading into the articles array.

Next, push the output of getScript() into the articles array. Then combine the array into a single string and assign it to finishedPage, the variable you declared earlier, which holds the finished HTML to send to the browser. Lastly, use the write() method to send finishedPage as another chunk and finish the response with the end() method.

  articles.push(getScript(type));
  // Concatenate the numbered titles and the script into one HTML string
  finishedPage = articles.reduce((c, n) => c + n);
  res.write(finishedPage);
  res.end();
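One caveat: text() returns decoded text, so a title such as "Why <blink> died" would be written into the page as real markup. A minimal sketch follows. It assumes a small hypothetical escapeHtml() helper is acceptable in place of a library, and escapes each title before wrapping it in h4. It also uses join("") instead of reduce(), which builds the same string but, unlike reduce() without an initial value, does not throw on an empty array.

```javascript
// Hypothetical helper: escapes the characters that are significant in HTML text and attributes
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;") // must run first so the entities added below are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Builds the numbered <h4> list from plain title strings, escaping each one
function renderTitles(titles) {
  return titles.map((title, index) => `<h4>${index + 1}. ${escapeHtml(title)}</h4>`).join("");
}

console.log(renderTitles(["Why <blink> died", "Q&A with a maintainer"]));
// <h4>1. Why &lt;blink&gt; died</h4><h4>2. Q&amp;A with a maintainer</h4>
```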

Defining the Routes to Handle GET Requests

Right under the fetchAndRenderPage function, use Express's get() method to define a route for each type of post. Then call listen() so the server accepts connections on the specified port.

app.get('/', (req, res) => {
  fetchAndRenderPage('/news', res);
});

app.get('/best', (req, res) => {
  fetchAndRenderPage('/best', res);
});

app.get('/newest', (req, res) => {
  fetchAndRenderPage('/newest', res);
});

app.get('/ask', (req, res) => {
  fetchAndRenderPage('/ask', res);
});

app.get('/jobs', (req, res) => {
  fetchAndRenderPage('/jobs', res);
});

app.listen(PORT, () => console.log(`Listening on http://localhost:${PORT}`));

In the code block above, each get() call registers a callback that calls fetchAndRenderPage with the matching post type and the res object. The home route (/) maps to the "/news" page.

Open your terminal and run npm run start. Once the server starts, visit localhost:5500 in your browser to see the results.

Congratulations, you just managed to scrape Hacker News and fetch the post titles without the need for an external API.

Taking Things Further With Web Scraping

With the data you scrape from Hacker News, you can create various visualizations like charts, graphs, and word clouds to present insights and trends in a more digestible format.
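As a starting point for a word cloud or bar chart, you could count how often words appear in the scraped titles. The sketch below is a hypothetical wordFrequencies() helper with a small, arbitrary stop-word list. It only produces the counts, and you would still need a charting library of your choice to draw them.

```javascript
// A small, arbitrary list of filler words to ignore; extend it as needed
const STOP_WORDS = new Set(["a", "an", "and", "the", "of", "to", "in", "for", "on", "with", "is"]);

// Returns [word, count] pairs sorted by descending count, then alphabetically
function wordFrequencies(titles) {
  const counts = new Map();
  for (const title of titles) {
    // Lowercase, then split on anything that is not an ASCII letter, digit, or apostrophe
    for (const word of title.toLowerCase().split(/[^a-z0-9']+/)) {
      // Skip empty strings, single characters, and stop words
      if (word.length < 2 || STOP_WORDS.has(word)) continue;
      counts.set(word, (counts.get(word) || 0) + 1);
    }
  }
  return [...counts.entries()].sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]));
}

const top = wordFrequencies(["Show HN: Rust compiler", "Rust in the browser", "Ask HN: Rust jobs"]);
console.log(top.slice(0, 2)); // [ [ 'rust', 3 ], [ 'hn', 2 ] ]
```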

You can also scrape user profiles to analyze the reputation of users on the platform based on factors such as upvotes received, comments made, and more.